CATARACTS Semantic Segmentation 2020

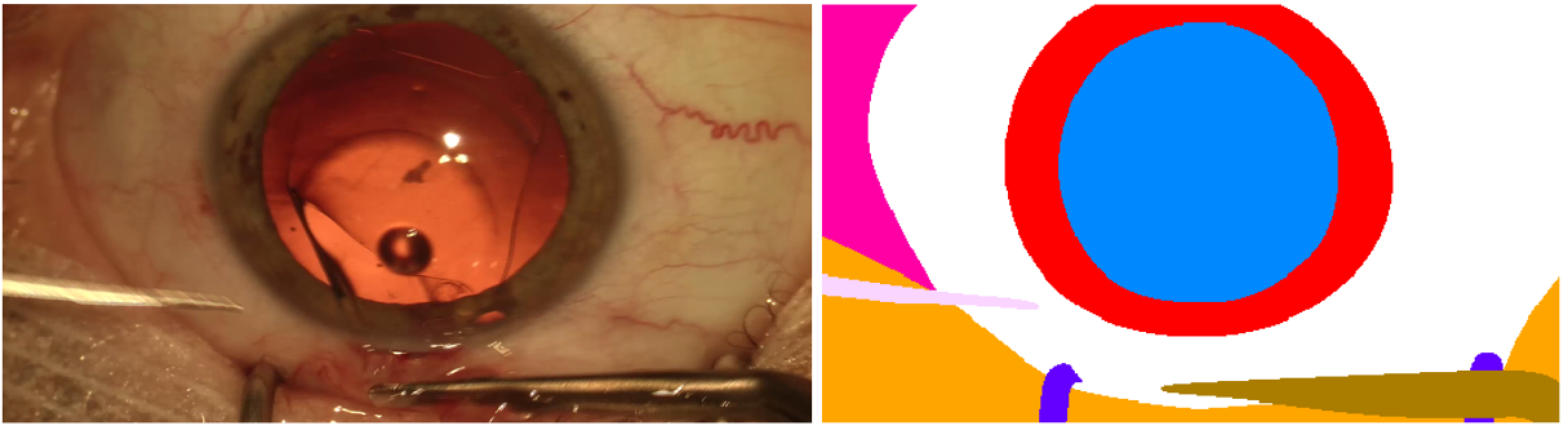

Video signals provide a wealth of information about surgical procedures and are the main sensory cue for surgeons. Video processing and understanding can be used to empower computer-assisted interventions (CAI) and to support detailed post-operative analysis of the surgical intervention. A fundamental building block for such capabilities is the ability to segment video frames into semantic labels that differentiate and localize tissue types and instruments. Deep learning has advanced semantic segmentation techniques dramatically in recent years, and several papers have proposed and studied deep learning models for segmenting color images into body organs and instruments. These studies, however, are performed on different datasets and at different levels of granularity, such as instrument vs. background, instrument category vs. background, and instrument category vs. body organs. In this challenge, we create a fine-grained annotated dataset in which all anatomical structures and instruments are labelled, allowing a standard evaluation of models on the same data at different granularities. We introduce a high-quality dataset for semantic segmentation in cataract surgery. We generated this dataset from the CATARACTS challenge dataset, which is publicly available.
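
To illustrate what evaluating "at different granularities" can mean in practice, the following minimal sketch collapses a fine-grained label mask into two coarser label sets. The class names, integer IDs, and the `remap` helper are hypothetical placeholders chosen for illustration; they are not the challenge's actual class list or tooling.

```python
# Hypothetical fine-grained label IDs (assumed for illustration only;
# the real dataset defines its own classes and IDs).
FINE_CLASSES = {
    0: "pupil",    # anatomy (assumed)
    1: "iris",     # anatomy (assumed)
    2: "cornea",   # anatomy (assumed)
    3: "forceps",  # instrument (assumed)
    4: "knife",    # instrument (assumed)
}

# Granularity A: instrument vs. background (all anatomy becomes background 0).
BINARY_MAP = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1}

# Granularity B: instrument category vs. background
# (anatomy becomes 0, each instrument keeps its own ID).
INSTRUMENT_CATEGORY_MAP = {0: 0, 1: 0, 2: 0, 3: 1, 4: 2}


def remap(mask, mapping):
    """Return a new mask with every pixel label replaced via `mapping`."""
    return [[mapping[label] for label in row] for row in mask]


# A tiny 3x3 fine-grained mask using the hypothetical IDs above.
fine_mask = [
    [2, 2, 3],
    [1, 0, 3],
    [1, 4, 4],
]

binary_mask = remap(fine_mask, BINARY_MAP)
category_mask = remap(fine_mask, INSTRUMENT_CATEGORY_MAP)

print(binary_mask)
print(category_mask)
```

Because every coarser label set is derived from the same fine-grained annotation, models trained for different granularities can be compared on identical frames.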

The CATARACTS Semantic Segmentation sub-challenge is part of the Endoscopic Vision Challenge, MICCAI 2020.